By TREVOR HOGG

There was a time when the audience was left to fill in the visual gaps with their imagination when attending live events, but this is no longer the case as stage productions have become technologically sophisticated and proficient in incorporating visual effects as part of the performance. This also applies to broadcast coverage, which has elevated the use of screen graphics by leveraging the tools of augmented reality. Recognizing the growing demand, companies such as Halon Entertainment, which is normally associated with creating visualization for film and television projects, have expanded into the world of virtual design for the likes of ESPN and Coachella.

“For the past two, two and a half years, you are starting to see the crossroads of everything coming together,” states Jess Marley, Virtual Art Department Supervisor at Halon Entertainment. “That being visual effects heading towards games, AR, VR and broadcast because of USD and all of the programs finding good ways to work together. [The virtual news desk for] ESPN is a good intersection to that because we’re using Jack Morton’s Cinema 4D files to introduce content into Unreal Engine and having to bridge the gap between all of those different departments working together, which is what virtual production does across the board.” The configuration resembles a LEGO set. “You are putting the pieces together in Unreal Engine, but you are getting pieces from different kits and packs from the client,” remarks Andrew Ritter, Virtual Art Department Producer at Halon Entertainment. “You have to make sure that they plug into the technology and creative vision sides and go directly into the audience’s eyeballs at the end of it.”

Nothing was radically different for ESPN. “ESPN used the same game setup process, meaning you had to look at your assets, plan out UVs and light maps to make sure that things are going to take in light and shadow accordingly and to bake things down,” Marley explains. “Because in order for things to run in real-time and still have dynamic elements as far as media screens and different inputs, the rest of the scene had to be light. We’re also talking about glass and different layers of glass or frosted glass. Different things that are SportsCenter-related that need to be built. It was a careful curation of materials running efficiently but also looking a certain way. It also had inputs that you could control versus things that needed to catch light at a certain distance, so you put specific bevels on things.” The screen graphics were a combination of old and new techniques. “We are emulating the depth monitor for SportsCenter, so we are putting those graphic feeds into a screen that is in the fake world but has to look like a real screen in the real world,” Ritter states. “Initially, we asked how many feeds were needed back there because every frame you add is going to drop our framerate and affect the finished quality. Originally, they were like, ‘Four.’ Then, we had a meeting with the director’s team at SportsCenter who said, ‘We need 16.’ It all worked out in the end.”

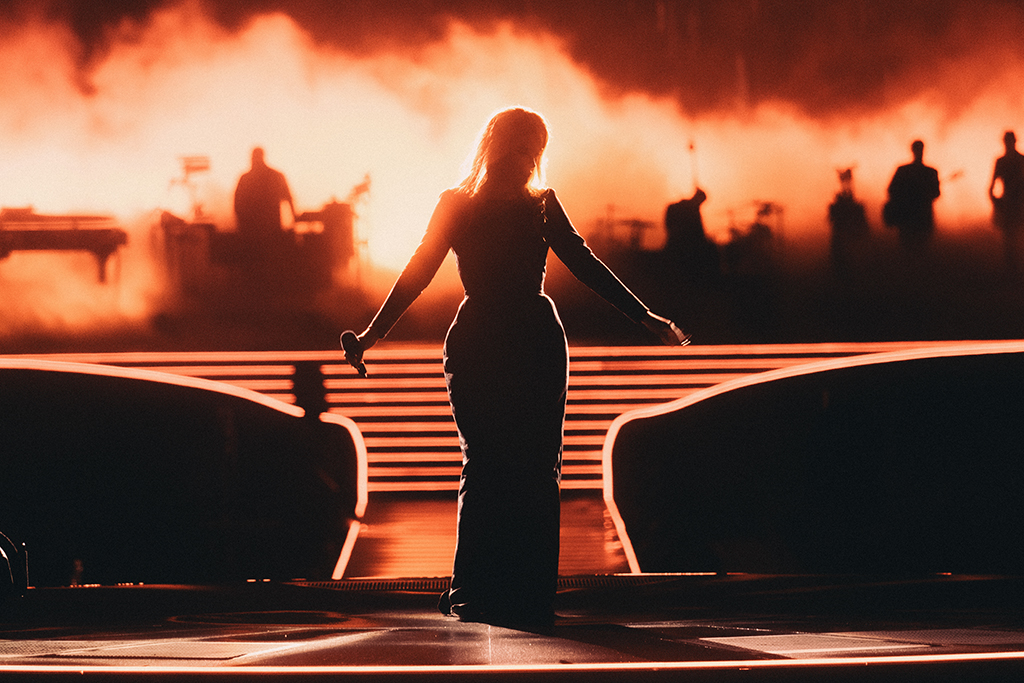

Preparation allows for adaptability. “Coachella was a good example of us creating AR content for a live concert that was being streamed live, and all of our stuff went off without a hitch, but they were pushing it,” Ritter remarks. “They were making changes up to the last minute, but we had rehearsals and got to see it on the stage through the cameras before the actual shoot started. We had to test everything to make sure we had plenty of space as far as our framerate. We were communicating with the directors’ team to let them know which angles should be on ahead of time. And we rolled with it.” The process for producing content for a studio or concert setting is similar. “The only thing you have to consider is in-screen versus out-of-screen,” Marley observes. “All we’re doing for ESPN content is building in-screen 3D, so we’re moving the content behind the screen for the most part. For the Coachella stuff, it’s out-of-screen and is AR, so it’s separate as far as the use case, but the content still needs to run at the same pace and as flexible and optimized because the camera has to be live, and all the content needs to move whatever the tracking it’s going into. But it’s all the same 3D content.”

Working within the same environment is Disguise, which has collaborated with artists such as Adele and Shania Twain on their concert tours, as well as the stage play spin-off Stranger Things: The First Shadow. “One might be a TV show that has been adapted to the stage at the West End, and another might be a massive concert tour,” notes Emily Malone, Head of Live Events at Disguise. “It’s all about how you can best realize those ambitions, and that comes down to flexibility, stability, reliability and how quickly you can achieve something.” Mixing various content together is commonplace. “You have capturing inputs, pre-rendered content, real-time content increasingly, and being able to lay all of those together is becoming normal. A lot of the work that allows that to happen occurs when people have meticulous pre-production workflows. There’s so much that happens beforehand to make sure once you get into the room, you’re set up for success. Previsualization and content distribution are huge parts. There are more traditional tools from the film post-production world making their way into making content for live events. We’re pulling technologies from all of these different places. A game engine is another tool in the arsenal. Even if you’re not doing real-time content, Unreal Engine might be the tool you want to use to make the content and render it out from there because you get a different aesthetic, or your render time is different.”

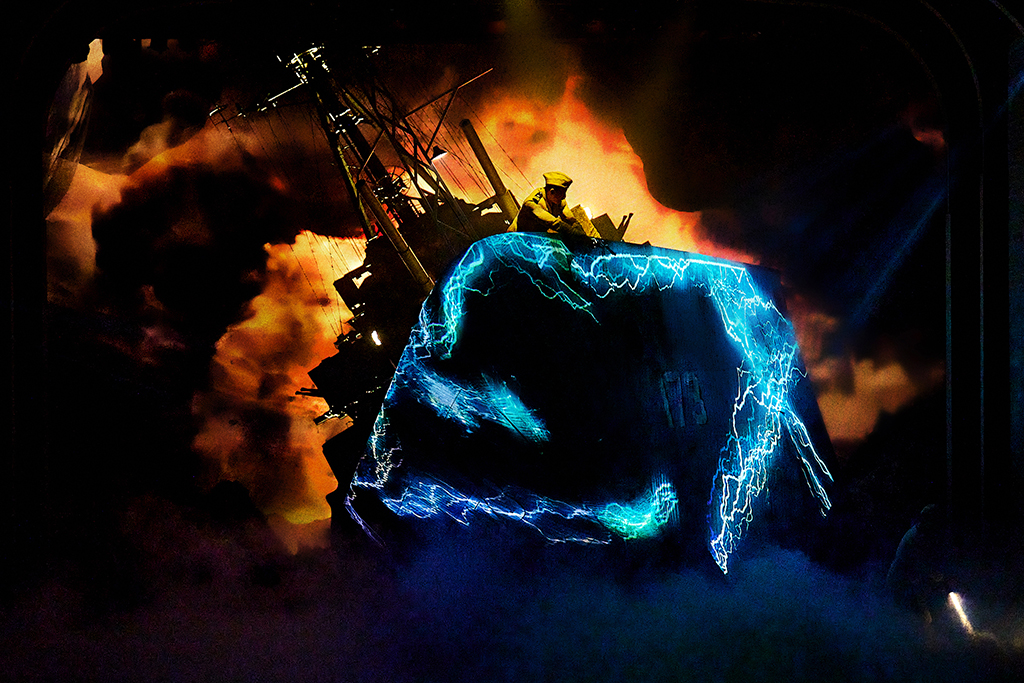

Lighting and video departments are dependent on each other. “It might be for a portion of a show that the light team is handed control of video fixtures so they can do something tightly integrated in this one section. Then, there might be parts where video maps to lighting fixtures,” Malone remarks. “There is a tight collaboration between those two teams. For Stranger Things: The First Shadow, you would be hard-pushed to tell what bits come from what department. It can be incredibly immersive and engaging to the point where you don’t have to do much work at all to suspend the disbelief. It happens to you. There have been technological changes that have helped with that. Things like denser pixel pictures and higher resolution; things are getting bigger, and it is becoming cheaper to do those things. Higher color fidelity as well because there is a lot more color accuracy work going on and, subtly, that can be achieved, and the flexibility you get with that. You’re able to do more compositing and do more integration with the things that are happening on stage.”

Creating an interactive experience that allows artists to expand the scope of their vision is something that the Museum of Modern Art facilitates. “At the core of our department’s mission is a strong desire to support our artists who are deeply informed by histories of experimental cinema, live performance, early video art, artwork responding to the media, and artists seeking to break down boundaries between genres and create something entirely new,” remarks Erica Papernik-Shimizu, Associate Curator, Department of Media and Performance at MoMA. “That’s something that feeds our desire to create the Kravis Studio.” The Marie-Josée and Henry Kravis Studio has the appearance and capability of a black box theater. “But really that facility is flexible rather than a dedicated purpose with frontal-facing time-based work,” notes Paul DiPietro, Senior Manager, Audio Visual Design and Live Performance at MoMA. “That draws from the traditional theatre where you have to change things quickly, or you can do something that is time-based and change a look with lighting, sound, projected image and performers. When you start talking about theatre or art or installation, it’s all blurred. It has more to do with the public’s perception of it than the actual creation of the art.”

“At Pre-Kravis Studio, we would think about: do we need to bring in a sprung floor, put down Marley and install power and projectors?” states Lizzie Gorfaine, Associate Director and Producer of Performance and Live Programs at MoMA. “It was such a huge production. The thing about the studio is that when you get a proposal, there are things available for you to use much more readily than at any other point in the history of our museum. The fact that there is a tension grid where you can affix projectors, lighting and speakers at any point in the ceiling is unheard of for a regular museum gallery. Our seating situation is much more flexible. There is a blackout shade that exists on the south side where we can cover this huge window that looks out onto 53rd Street, which means in a matter of seconds, we have a light-locked room where we can achieve productions of the scale [required for the ‘Shana Moulton: Meta/Physical Therapy’ exhibition]. The thought initially around creating a space like this was that we could even consider these kinds of proposals and be able to achieve that level of production, which would have been much more costly and time- and resource-intensive than before.”

Arlekin Players Theater and Zero Gravity Virtual Theater Lab are experimenting with technology but not to the point of merging film and theatre. “The point is to continue their own path of development,” observes Igor Golyak, Founder and Producing Artistic Director of Arlekin Players Theater and Zero Gravity Virtual Theater Lab. “There are some things that are a crossover, but for me, they are a substitution. Creating something filmic onstage is not an ideal way of using the stage and vice versa. I was watching Poor Things and how interesting, imaginative and theatrical it is. It’s quite stunning what they achieved. Sometimes, things that aren’t overly realistic in theatre are more difficult to do in film; they can make the audience members [react] more [in theatre] than in a realistic filmic way of expression. It’s a different way of affecting the audience, and both work. It’s not about comparing. It’s about using the contemporary language of expression that includes special effects, virtual reality and Unreal Engine to express something that needs to be expressed.”

The coronavirus pandemic had a significant impact. “Before the pandemic, I had a studio where we fit 55 to 60 people,” Golyak explains. “My virtual production theatrical shows that are live were presented simultaneously in 55 countries. Connecting to a wider set of audience is huge. It’s a different experience, and unlike a movie, they’re experiencing it live, and their interaction counts. They’re not passive listeners or watchers. They provide input into what is happening onstage or on a virtual stage and make choices. It lets me connect with more audiences and lets me connect the audience members to each other who otherwise wouldn’t have connected. There is a sense in a live theatre room where we are all sitting together, and if it’s inspiring and something incredible, we start to breathe together and our heartbeats align. There is a sense of community that comes from using contemporary tools to express the soul.”