By TREVOR HOGG

A fascinating aspect of the visual effects industry is that the final product depends on technicians and artists working together, even though the two groups speak and think differently. Yet they share the goal of creating imagery that allows filmmakers to achieve their creative ambitions and fully immerse audience members in the storytelling. The traditional visual effects paradigm is being fundamentally altered as AI and machine learning streamline how imagery is created and produced. There is no shortage of opinions on whether this new reality will empower creativity or sound its death knell.

“In many ways, creators and AI are evolving together,” states Paul Salvini, Global Chief Technology Officer at DNEG. “AI is becoming more capable of creating more controllable outputs, and creators are becoming more familiar with using AI to augment their existing tools and workflows. It will take a while for visual effects workflows to be reshaped, as the AI toolset for visual effects is still in its infancy. It’s exciting to see new ways of interacting, such as natural language interfaces, emerging within the field. As our chosen tools become more intelligent, we can expect to see more human interactions being about expressing creative intent and less about the mechanics of execution.” The one thing that distinguishes high-end from mainstream content creation is the need for complete creative control. “The lack of controllability of AI is frustrating for many professional users. It’s difficult to tweak subparts of a generated image that is close but not yet there. As we develop a new generation of AI tools for artists, the artist experience and the enhancement of creative control are paramount,” Salvini says.

Every technology has its pros and cons. “AI has been a controversial technology because of concerns over how models are trained [the source of data and the rights of the artists] and what AI might mean for various jobs in the future. We need to be extremely responsible and ethical in how we develop and deploy AI technologies,” Salvini remarks. The rapid advancement of AI technologies has been impressive. “The high level of investment in AI has resulted in a major leap in capabilities available to end users. Like all new technologies, I’ve learned that AI takes time to mature and be integrated into existing tools and workflows.” The biggest challenge has been the hype. “The high-end of visual effects requires a level of creative control that isn’t available with most of today’s AI tools. There is a general frustration that the consumer tools for AI are advancing far more rapidly than those for the professional market,” Salvini says. Misconceptions have become a daily occurrence. “Depending on the conversation, its impact is often dramatically underestimated or overestimated. There is a sense that AI will transform workflows overnight when that simply isn’t the case.” Everything centers around bringing great stories to life. Salvini states, “AI brings a set of capabilities that will expand our toolset for making that happen. As the technology in our space evolves [and not just through AI], our focus needs to remain on making it easier to focus on the creative value we add.”

AI models in their best form serve as a force multiplier and/or mentor. “For senior personnel, it allows them to either iterate certain ideas faster and then curate the results, choosing which show the best potential for further development,” notes Steve MacPherson, CTO at Milk VFX. “In other cases, it can open up increased understanding across different disciplines, which increases the breadth of activity for that individual. Finally, it can serve as a mentor, allowing the mentee to zero in on specific areas of questioning in exploring any given topic.” Currently, AI has not moved beyond impacting the workflow for conceptualization. “Under the hood on the development side, there are times when it can assist with code-based analysis or initial idea prototyping. I say this cautiously for a couple of reasons. First, any code that goes into production should be peer reviewed. Second, a large part of the development job is ensuring the code base is stable and maintained, and I am wary of introducing machine-generated technical debt.” AI is a useful companion. “I test ideas against it. I use it to challenge assumptions. I use it to increase breadth of understanding or as a refresher on topics I’m already familiar with. I’ve learned a new-found sense of confidence in tackling complex topics. Not that I can understand them quickly, but that I can chisel away in a method that works for how I learn. It’s brought a real and genuine sense of fun and exploration to the work I do,” MacPherson says.

“The pros are the extended range AI can offer the individual,” MacPherson notes. “The ability to gather basic information quickly. To learn at your own speed in a manner that suits your style of learning. The cons, and I am always on guard against this for myself and others, are to let the tool do the work for you. By this, I mean the critical thinking, the following of intuition, the understanding of the individual pieces and how they fit into the whole. For me, the emphasis on building a team is on building human character and identifying people whose principles around effort and knowledge are aligned with the company’s goals.” Some of the results have been unexpected. MacPherson says, “I have dumb questions. We all do. Things we should know but for whatever reason don’t. These LLMs are a path to asking those dumb questions without fear of judgment. It gets them out of the way and plugs gaps in knowledge quickly and efficiently. It’s wrong frequently enough to remind me to keep thinking and to make sure I use it to challenge me as much as I remind myself to keep challenging it.” The hype has to be ignored; what matters is staying focused on real-world solutions. “It’s both oversold and undersold. GenAI is currently a novelty act that might progress out of the basement and into daylight. Maybe. I’m banking on the need for humans. They are language models, and the limits of their ability are the limits of our language. As language models, the insights they can give us into how we think and express ourselves are incredibly insightful and rewarding for me as a career technologist.” Joe Strummer of the Clash once declared, “The future is unwritten.” MacPherson adds, “It’s perfectly human that the most obvious and succinct summation of our times comes from a ’70s punk whose band rebelled against the complacency and excesses of the music industry!”

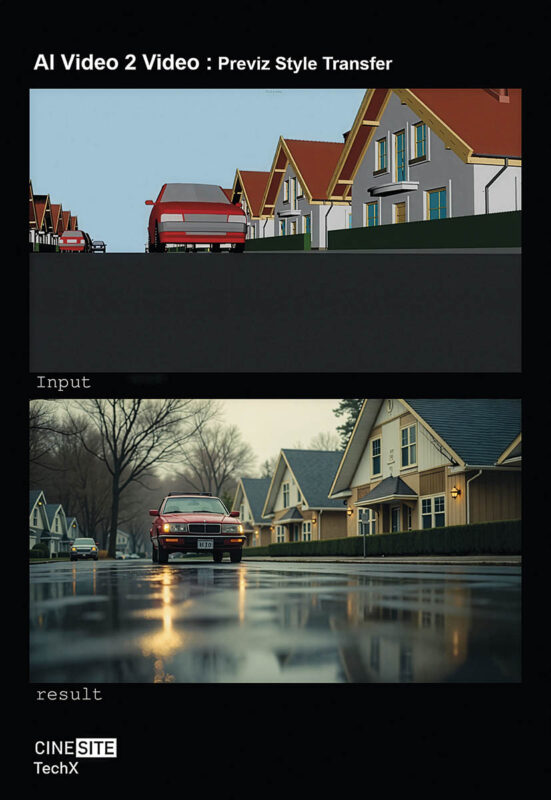

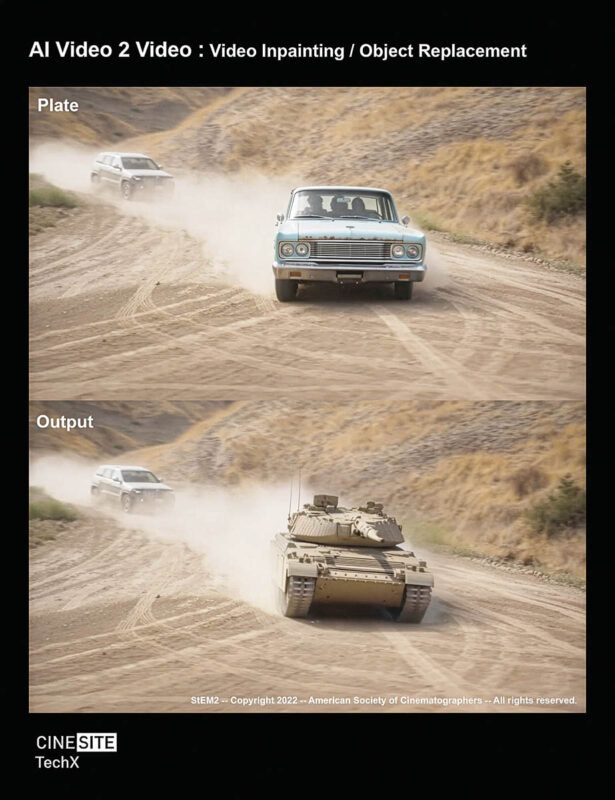

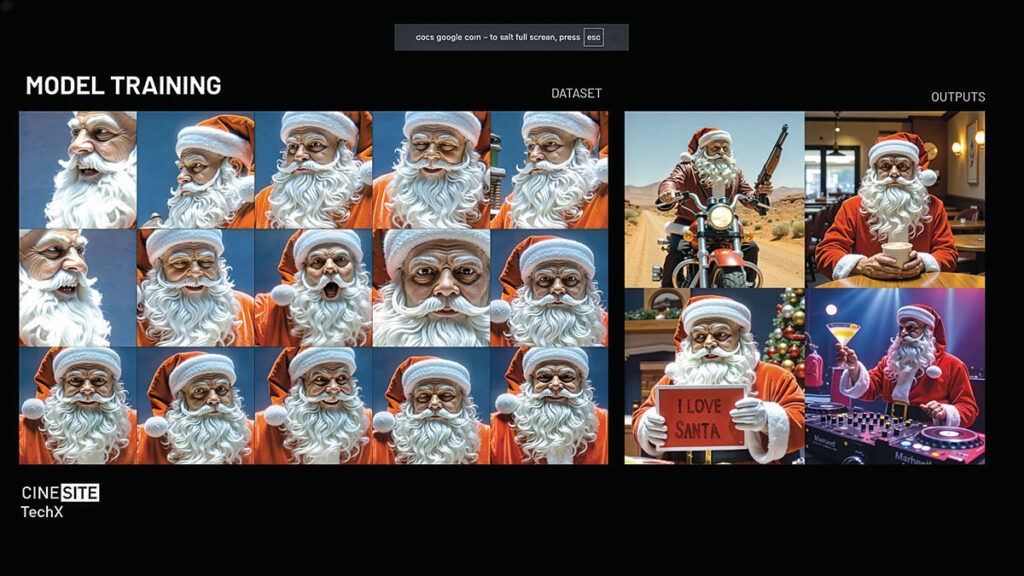

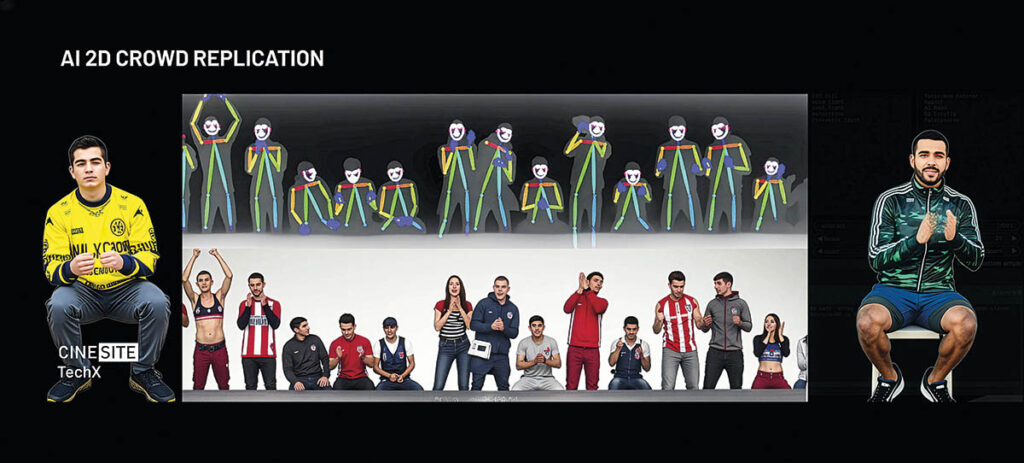

Recognizing that generative AI is not going away, Cinesite has established an internal technology exploration unit called TechX. “We’re a non-production unit, so we’re not using the technologies directly on productions at the moment,” explains Andrew McNamara, Generative AI Lead at Cinesite. “It allows us to inform ourselves, our team, different departments and senior management about the potential of how these things can be used. One part is to increase efficiency in certain areas, and the second is to allow us to amplify creatively what we’re doing and what it means in the longer term in the wider content creation ecosystem. There is a lot of fear around whether you can do a whole shot with a generative AI workflow. We’re trying to figure out where that sweet spot is. You have one end stating, ‘We’re going to replace everything.’ Then there will be tangible use cases in visual effects or an animated production where a lot of the technologies can be utilized to support mundane, tedious, time-consuming and expensive things that aren’t creatively driven but can give us an advantage.” There was a time when a picture was worth a thousand words, but now you need a thousand words to generate an image. McNamara says, “It’s about having a human in the loop. Having AI as a collaborator, not something to replace you, is important. For example, you want to leverage the ideas and skills of concept artists to generate stuff. But you have a client who wants to see 20 versions of the same thing tomorrow. That’s a lot of pressure on a concept artist to turn around. You could argue that AI gives concept artists more options to support that accelerated workflow.”

Hybrid workflows are where everything is headed. “We will probably see new tools and technologies to support artists and creatives that probably haven’t been invented yet that take different mentalities of how we interact with the AI,” McNamara believes, “but also using traditional things like hand-drawn and concept art. It will end up somewhere in the middle in the future.” AI has already taken on the role of Google Assistant. “If you start to look at some of the large language models that are getting integrated into some of the digital content creation tools, it’s there to support you. You can use ChatGPT, feed it some artwork you’ve done or a photo, and ask, ‘Can you show me if this had a green horn on the right-hand side?’ You’re supplying it with some starting points and getting it to give you some options. The more you delve into these kinds of tools, even Midjourney has some quite interesting tooling at the moment to allow you to have these interactive dialogues. You can reject what it gives you. It’s not the end-point but part of a process. You still have the element of choice to say if you want it to do certain things. It’s not fully automated. Maybe we’ll eventually see, in five or 10 years’ time, haptic stuff where you’re physically sculpting a whole range of things in collaboration with voice and prompts. It potentially allows the artist to choose which of those mentalities best suits how they want to work. It won’t just be prompts in the future. It might have a 3D component where you use some of the basic 3D tools to specify your compositions.” The visual effects industry is the business of manufacturing beautiful imagery. McNamara notes, “You could argue that it’s a homogeneous way of doing the work. Things like specialism within that are quite interesting. Do we think that specialists will be less important if you could have a visual effects or creative generalist who can do more and be augmented with AI? I don’t have the answer for that question.”

Terms are being misused. “I even hate using the phrase AI because I don’t think that there’s a lot of intelligence in these tools,” reveals Kevin Baillie, Vice President, Head of Studio at Eyeline. “They’re like rocket fuel for people who are less technical and more creative. However, we still need technicians to help ensure that these things can work at scale and fit into normal production processes.” The role of the technician will change. “Some of them will all of a sudden find themselves in a position of being a creative sitting next to one of their favorite directors on the planet, like I did when I started working with Robert Zemeckis,” Baillie says. There are misconceptions. “We have a narrative out there from some of the bigger tech companies that are creating these tools, like ‘prompt in and movie out,’ and that’s far from the truth. There are some instances, such as early concept ideation, where it can add details that otherwise would take forever to do. You might have an image of a creature from a piece of traditional concept design or something modeled in ZBrush and use a tool like Veo 3, Runway or Luma AI to make it move. You can discover things from that process, which you wouldn’t have thought of, these happy accidents. By and large, they are just tools, especially when it comes to doing final pixel work. We spend more time fighting the tools to get them to be more specific in their output and have output fidelity. In cases like the Argentinian series The Eternaut, which had a few fully generative shots, they started with a low-resolution version of an apartment building in a city block in Argentina to make this building collapse. The inputs were very much curated in the same way you would if you were doing previs or postvis. A lot of the things in the middle were using various models to restyle that very low-resolution early image. That was then used to feed a video diffusion model to make it move right. The process was highly iterative and highly guided by artists exactly as the visual effects process would be now.”

Workflows are going to change fundamentally. “When we’re looking at these workflows, it is important to embrace the powerful things about them,” Baillie notes. “In a traditional visual effects workflow, there’s an artistry that is separated by technical steps. Whereas, in the generative image and video creation process, a lot of those steps are combined. You’re going to get the best result out of an image generation if you can give the diffusion model everything it needs to know to make that image in one go. There are limits to that, but as we think about how to best leverage these tools, the steps will be fundamentally different from those in a traditional visual effects workflow. Could we have an instance come with UI living within Nuke, that you do an input into, and then there’s a whole generative, specific workflow within a node, and it pipes out the output? That would probably be a comfortable thing for visual effects artists to wrap their heads around. There is space for that. However, it is important not to let the traditional visual effects steps and mechanisms get in the way of potential opportunities the tools offer.” Baillie addresses a sense of déjà vu. “I will remind anybody paying attention that it feels like the 1990s to me, when there were many unsolved problems, such as digital humans, water and fire. No one tool did it all. You had to take all these disparate tools and work with smart people to figure out how to combine them all to get beyond each one’s limitations to then arrive at a result that the filmmaker wants, all in service of telling a great story.”