By CHRIS McGOWAN

Innovation and integration continue to push virtual production into new realms of possibility, both on and beyond the LED wall. “Virtual production is a large umbrella term, and the more visibility we can shine on the most cost-effective workflows and toolsets within that umbrella, the further we will be able to push it,” comments Connor Ling, Virtual Production Supervisor at Framestore. “At Framestore, we’ve already made big strides in integrating the outputs and workflows of virtual production into the traditional VFX and show pipeline, which will also increase confidence in the creatives to harness it.”

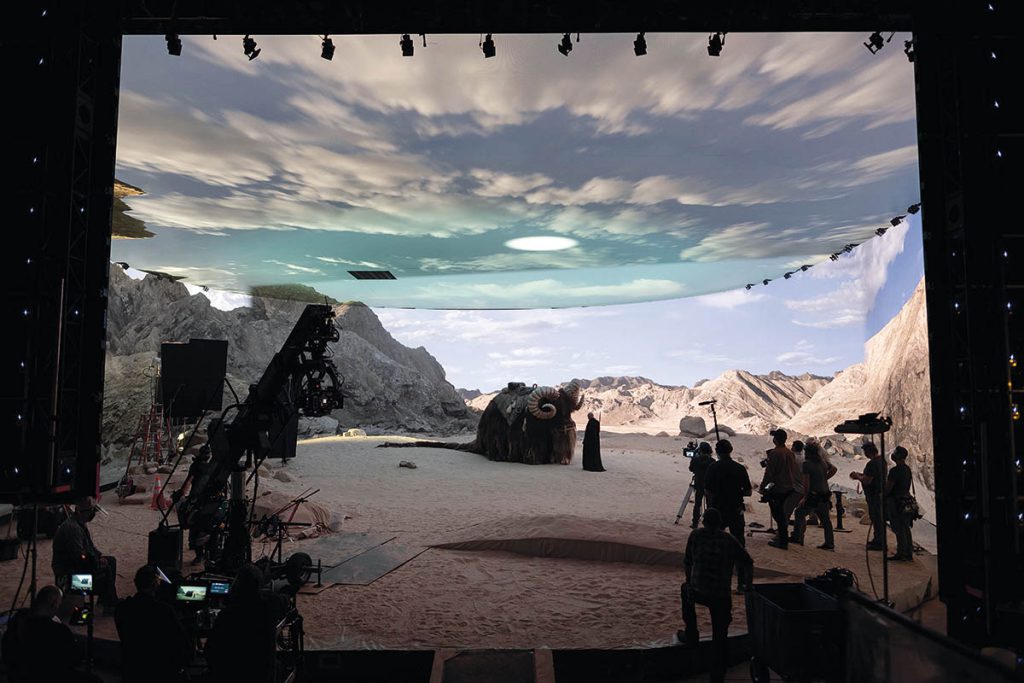

The next phase is about simplicity and scale, according to Tim Moore, CEO of Vū Technologies. “We’re focused on making virtual production more accessible, not just to filmmakers and studios, but also to brands and agencies that need to produce smarter. That means streamlining the workflow, building better tools, and connecting the dots between physical and digital in a way that feels intuitive. We’ve seen a lot of evolution since we started on this journey in 2020, and don’t expect that to stop anytime soon as the broader industry continues to change.” Regarding virtual production and LED volumes, Moore adds, “Some of the biggest innovations haven’t been with the LED itself but in what surrounds it. Camera tracking has become more accessible. Scene-building is faster thanks to AI and smarter asset libraries. We’re also seeing tools like Sceneforge that help teams previsualize entire shoots before they step onto a stage. The focus now is on removing friction so the technology disappears and creativity takes the lead.”

Framestore has always had a broad view of what constitutes virtual production, according to Ling. “Yes, shooting against LED walls is virtual production, but virtual production is not shooting against LED walls. LED is one tool in our wider suite [called Farsight, which also encompasses v-cam, simulcam and virtual scouting] and, like any solution, each tool has applications where it shines and others where one of our other tools may be more applicable. When we talk about this suite of tools, they’re not just being used at the beginning of production, but at every stage of the production life cycle.”

Ling explains, “Our clients are using Farsight more and more, sometimes as standalone elements, sometimes, as with projects like How to Train Your Dragon, as part of a full ecosystem that starts at concept stage, runs through previs, techvis and postvis, and on into final VFX.” Paddington in Peru, Wicked and Barbie also utilized Farsight. “The biggest innovation has been in terms of making this system scalable, fully integratable and, perhaps most importantly, utterly intuitive – we’ve seen filmmakers who’ve never used the kit go from zero to 60 with it, and their feedback has allowed us to refine the tech and tailor it to their specific needs, almost in real-time.” Ling adds, “In broader terms, one of the key changes we’ve seen is more complete, more relevant data being captured on set, which makes it far better when it comes back to VFX. This is less about innovation, per se, than users becoming more adept at using the technology they have at their disposal.”

He Sun, VFX Supervisor at Milk VFX, notes, “We’re moving toward a more emerging creative process where real-time tools are not only used on set, but also play a vital role from early development through to post-production and final pixel VFX delivery. As machine learning continues to advance, it will work more closely with real-time systems, further enhancing creative collaboration, accelerating iteration and unlocking new possibilities for storytelling.” Sun continues, “We’re also seeing a shift toward greater innovation in software development driven by increasingly powerful GPU hardware. Real-time fluid simulation tools, such as EmberGen and LiquiGen, enable the generation of volumetric, photorealistic smoke, fire and clouds, which can then be played back in real-time engines. Innovation is also expanding beyond Unreal Engine. For example, Chaos Vantage brings real-time ray tracing to LED wall rendering.” Other notable advancements include Foundry’s Nuke Stage, which, according to the firm, enables real-time playback onto LED walls of photorealistic environments created in industry-standard visual effects tools such as Nuke. Sun also points to Cuebric, “which leverages generative AI to create dynamic content directly on LED volumes.”

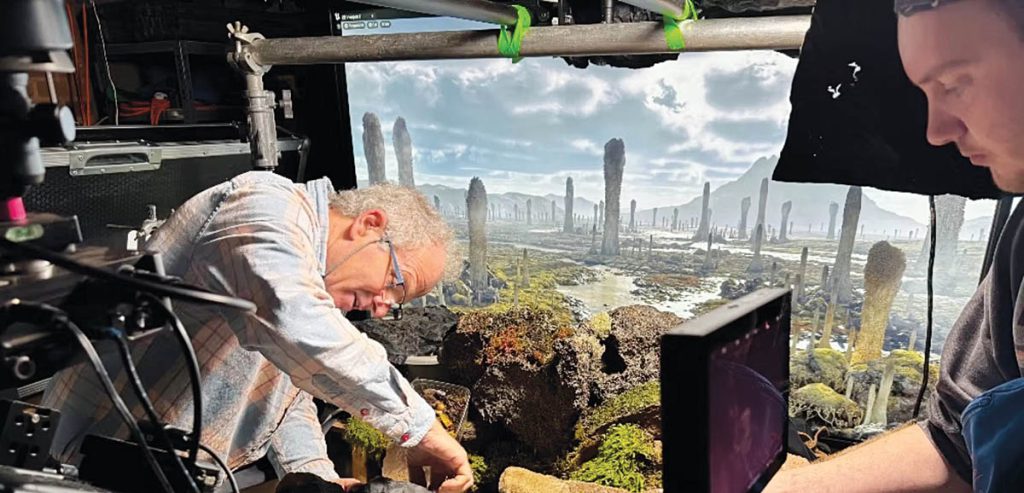

According to Moore, hybrid pipelines are essential. He explains, “Teams need the flexibility to mix practical, virtual and AI-powered workflows. The key is making those handoffs smooth and accessible without requiring a massive team of specialists to operate the system.”

Sun remarks, “At Milk VFX, I designed our real-time pipeline using a modular approach to support flexibility and scalability across a range of projects. This pipeline was built and refined during two major factual series. Our real-time VFX workflow primarily supports two types of VFX shots: full CG shots and hybrid shots. In hybrid shots, certain elements, such as characters, environments or FX, are generated through the Unreal Engine pipeline, while others are rendered using our Houdini USD pipeline. These elements are then seamlessly integrated with live-action plates during compositing.” Sun continues, “Assets are designed to be dual-format compatible, making them interchangeable between Houdini and Unreal Engine. This allows for efficient asset reuse and smooth transitions across departments. A key part of our system is a proprietary plug-in suite focused on scene import/export and scene building. This enables artists to move assets and scene data fluidly between Unreal, Maya and Houdini. For instance, animators can continue working in Maya using scenes constructed in Unreal, then reintegrate their work back into Unreal for final rendering. We’ve also developed an in-house procedural ecosystem generation tool within Unreal, allowing artists to create expansive landscapes efficiently, such as prehistoric forests and sea floors. For rendering, we use both Lumen and path tracing depending on the creative and technical needs of each shot, with final polish completed by the compositing team to achieve high-end visual fidelity.”

Sun adds, “When implementing real-time technology as part of the visual effects pipeline, one of the biggest challenges beyond the technology itself is the necessary shift in mindset from traditional workflows. For example, I bring the compositing team into the process much earlier, involving them directly in Unreal Engine reviews. At the same time, I treat the Unreal team as an extension of the comp team, as they work closely together throughout production. Having both teams collaborate from the start fosters a more integrated and creative environment. This synergy has proven to be a game-changer, enabling us to iterate on shots far more quickly and efficiently than in traditional pipelines.”

Justin Talley, Virtual Production Supervisor at ILM, comments, “One of the areas we’ve focused on over the years is ensuring we can seamlessly move between VP/on-set and post-production VFX contexts. A recent development has been seeing all the work we put into developing custom rendering and real-time workflow tools for on-set now make its way more broadly into traditional VFX work as well.” Talley notes, “We’ve continued to develop our simulcam toolsets and related technologies like real-time matting, markerless mocap and facial capture technologies. Similarly, I think some of the work we’ve done around IBL [image-based lighting] fixture workflows has an interesting future when combined with simulcam to give you some of the benefits of LED volume VP for scenarios that don’t warrant a full LED volume.” Talley adds, “The latest-gen LED fixtures are shipping with profiles optimized for a pixel mapping implementation – some of them with video color space – that allow VP teams to map content onto these powerful, broad-spectrum light fixtures.”

Regarding AI-driven volumetric capture, Theo Jones, VFX Supervisor at Framestore, observes, “We’ve seen a lot of tests, but nothing that feels like it’s anywhere near production-ready at this stage. As impressive as an AI environment might look once you put it on your volume, the key issue is still the inability to iterate, adjust, or make changes in the way any DP or director is going to ask you to do. It’s an interesting new tool, but they need to add more control for it to become genuinely useful.” Ling notes, “In a funny way, it’s not dissimilar to the LED boom a few years back. Some people are selling it as an immediate ‘magic bullet’ solution, but in reality, you’re not hearing about the time that goes into creating the imagery, let alone the work that goes in afterwards to bring it up to spec.” Talley comments, “Gaussian Splatting is a compelling technique for content creation. The benefits of quick generation and photorealism are hard to ignore. There are still several limitations, but the speed at which these tools are maturing is impressive.”

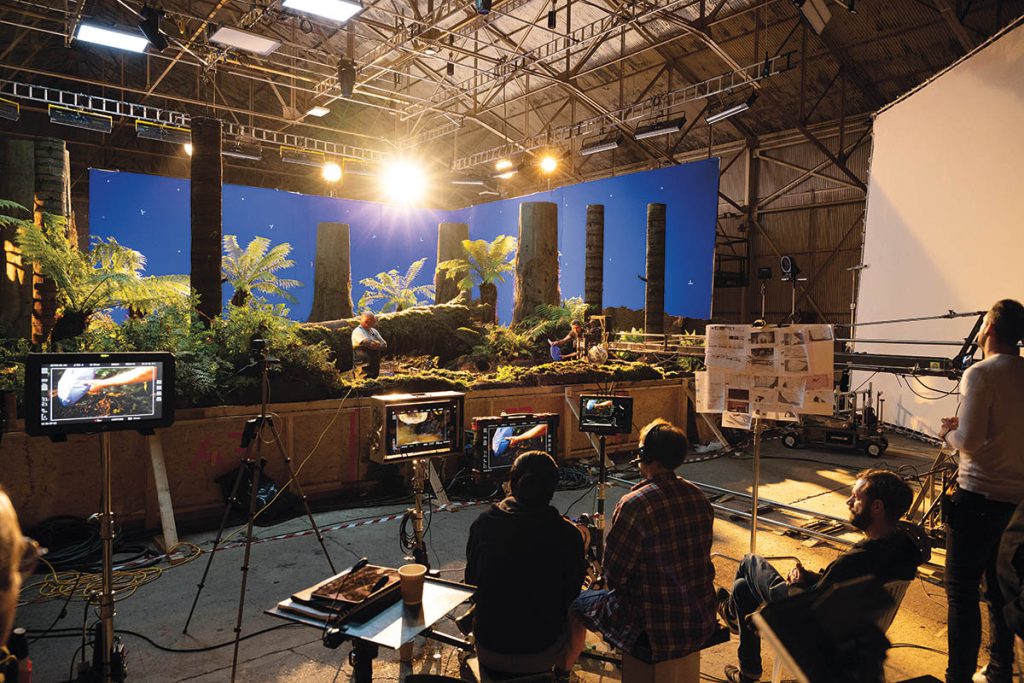

Greenscreens are still relevant. Ling explains, “As with most things within virtual production and VFX, it’s another tool on the toolbelt. There are still some completely valid use cases where bluescreen is the most cost-efficient route for shooting. Is it preferred? That might depend on the department you ask, but the use of LED screens in production has by no means removed the validity of the green/bluescreen workflow. I think the more exciting progressions in shooting against green or blue are the progressions in simulcam or the use of screens [that are] not blue or green, like the sand screens used on Dune.”

DNEG has launched DNEG 360, a new division created in partnership with Dimension Studio. According to the firm, the division provides filmmakers and content creators with visualization, virtual production, content creation and development services. The aim is an end-to-end production partnership, with a seamless transition from development, pre-production and virtual production into visual effects and post for feature films, episodic projects, advertising, music videos and more. Steve Griffith, Managing Director of DNEG 360, states in a press release: “DNEG 360 is the only end-to-end, real-time-powered service provider operating at this kind of scale anywhere in the world. Our experienced and talented team has delivered thousands of shots, which has allowed us to refine our approach through hands-on experience. This allows us to provide guidance, experience and continuity for our clients as they navigate the filmmaking process, reducing risk and finding cost efficiencies.” As part of the unveiling of the division, DNEG is debuting two of the world’s largest LED volume stages in London and Rome.

Brian Cohen, Head of CG, Sony Pictures Imageworks, notes, “From SPI’s perspective, virtual production is an exciting aspect of our feature animation projects, particularly in our partnership with Sony Pictures Animation. Using Epic’s Unreal Engine for visualization at all stages of production has opened the door for us to incorporate traditional cinematography techniques into our workflow. I think our biggest challenge [with virtual production] has been the cultural shift for artists and filmmakers to work in a VP capacity. While everyone loves real-time feedback, not everyone is prepared to make creative calls in a fluid, non-linear workflow.” Cohen adds, “On the feature animation side, we are defining the trends and leading our creative partners into a brave new world of interactive filmmaking with our virtual production efforts. It will take time for all filmmakers to truly be comfortable in this looser, more exploratory way of working, but ultimately, we see it as the only way forward as it provides a level of instant feedback and creative control we’ve never been able to provide previously.”

One of the biggest challenges in virtual production is expectation versus reality. “A client sees a beautiful render and assumes we can just hit play on set. But the process still requires creative planning and technical coordination,” Vū’s Moore observes. “We’ve also seen growing pains when traditional teams try to plug into a VP pipeline without the right prep. We’ve learned to lean heavily into pre-production and prototyping. Building scenes early, doing walk-throughs with the director and DP and making sure creative and tech are speaking the same language – that’s what makes the difference.” ILM’s Talley concludes, “We continue to see nexus points develop between different flavors of VP tools and techniques, where combining them and the artists that specialize in them pushes everything forward.”