By TREVOR HOGG

Images courtesy of beloFX and Apple TV.

A fierce California windstorm in 2018 causes a transformer to spark and ignite the most devastating wildfire in the history of the state. To place audiences in the middle of the onset, course and aftermath of the devastation, filmmaker Paul Greengrass decided to tell the story of school bus driver Kevin McKay navigating through a city and landscape filled with flames, smoke and embers. Ironically, real forest fires hindered the production and created more work for the visual effects team led by Charlie Noble, who chose beloFX as one of the vendors to bring the harrowing journey to cinematic life. The contributions of beloFX began at the previs stage, with Unreal Engine providing the means to visualize and plan the action, lighting and camera movement for various sequences. BeloFX’s work carried over to 161 shots in post-production that take place in the air with firefighting planes and on the ground as the school bus attempts to get out of a traffic jam, unknowingly heading to a school that has been consumed by the quickly spreading blaze.

Principal photography took place in Albuquerque, New Mexico, with plates captured in California. “The irony is that a lot of this was planned to be captured in-camera that ultimately couldn’t be because there were forest fires,” reveals Russ Bowen, Visual Effects Supervisor at beloFX. “They were going to use CAL FIRE, which was a big part of the film. But a lot of the aerial plates we couldn’t do because of the Park Fire that raged in 2024. That turned into big, full-CG sequences for us. BeloFX contributed massively on the previs and visualizing this as much as we could. Paul Greengrass is notorious for trying to ground everything in reality, so being able to visualize or plan the path of the fire was important.” The fire began in Pulga, progressed into Paradise and concluded in Chico. “It’s worth saying that we were in a very unusual situation,” notes Rick Leary, Head of CG at beloFX. “This came up in the middle of the darkest part of the writers’ strike, so there was no work going on as such. Charlie came to us with a film that looked like it was going to go, and we were almost the first people on the show. We thought, ‘What can we do? We can certainly visualize to a decent standard this whole terrain and map the fire out as a tech recce to work out what we wanted to do.’”

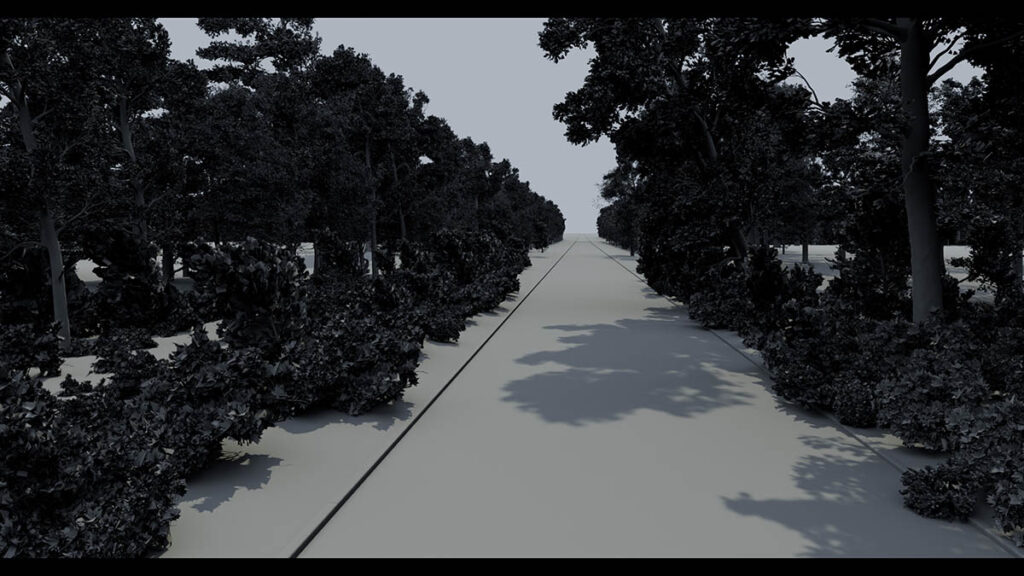

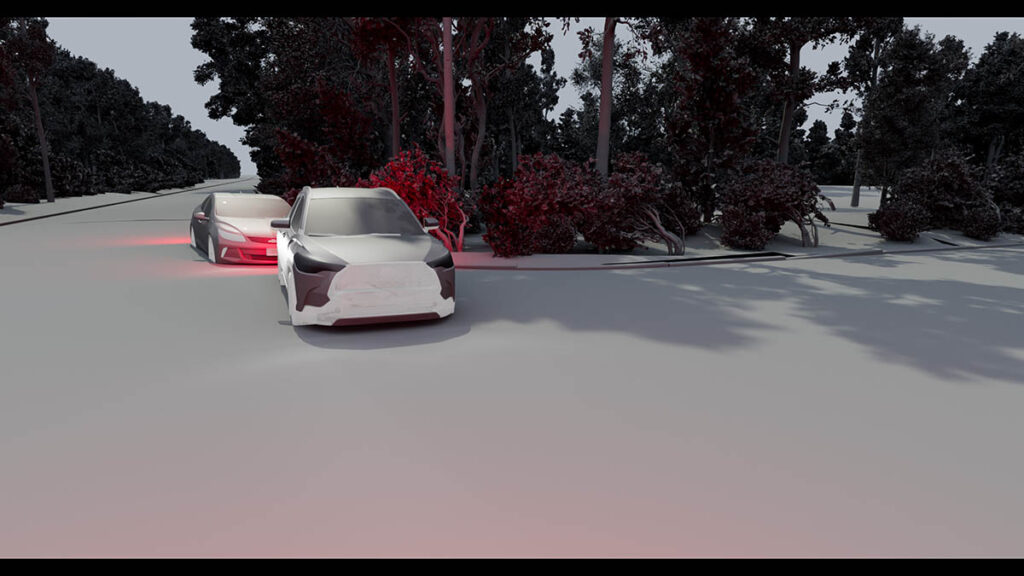

“[Traffic] is the thing that stops people from getting away. We set up a process where we could do considerable lengths of slow-moving traffic jams with hundreds of cars. This is something that Unreal Engine excels at. … The way to do it was to write a simple driving game and tape record your takes one after another so you can build up a load of movement with all of the cars moving around each other reasonably correctly and quickly that way.”

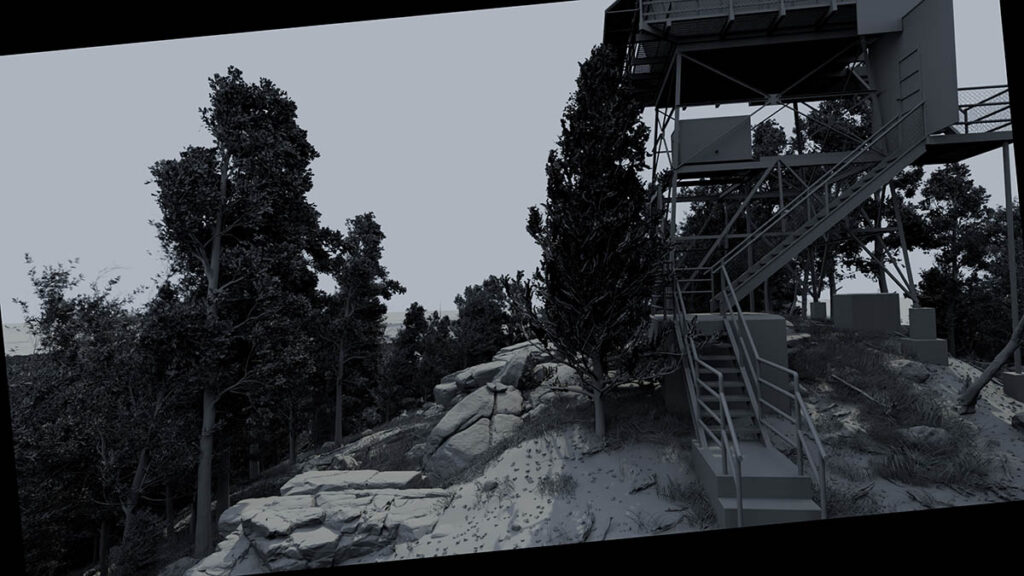

—Rick Leary, Head of CG, beloFX

At the core of the visualization imagery was the USGS (U.S. Geological Survey) 1-meter DEM data, which is a high-resolution, bare-earth elevation dataset created through LiDAR scanning. “We pulled NAIP [National Agriculture Imagery Program] surveys, which are about 60 centimeters to a pixel, not particularly high resolution but good enough to get going,” Leary explains. “We recreated 45 square kilometers in Northern California and built it in Unreal Engine. We started getting a virtual database together, such as fire and smoke simulations, and ended up with a world you could fly around and say, ‘This is what it might look like from here.’ We populated it with matchbox houses.” The camera style had to be kept in mind. “It’s Paul Greengrass, so it’s going to be a documentary style,” remarks Vic Wade, Head of 2D & Training at beloFX. “Charlie Noble came to us with this affecting YouTube footage of survivors of the real event, which is not so long ago. People still had 2K HD cameras in their pockets, and a lot of this event was captured. It’s terrifying footage. There was a lightbulb moment of it being an opportunity to do something that I’ve been keen to do for a long time, which is to visualize this event as accurately as possible in a lightweight, relatively cheap way compared to how it had been done in the past; essentially, create a digital twin version of the landscape, environment and the progression of the event, put cameras in there, and start to design shots as if you were able to recce the event as it was happening and find those key moments.”
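To give a sense of scale for the datasets Leary mentions, the figures quoted above (45 square kilometers, 1-meter elevation spacing, roughly 60 centimeters per imagery pixel) can be turned into approximate sample counts. This is back-of-the-envelope arithmetic only; the function and constant names are illustrative, and the counts ignore tiling, overlap and any resampling beloFX may have applied.

```python
# Rough sample counts for the terrain area described in the article.
# Only the three input figures come from the article; everything else
# is illustrative arithmetic, not beloFX's actual data layout.

AREA_KM2 = 45            # recreated area, per the article
DEM_SPACING_M = 1.0      # USGS 1-meter DEM
NAIP_M_PER_PIXEL = 0.6   # "about 60 centimeters to a pixel"


def samples_for_area(area_km2: float, spacing_m: float) -> int:
    """Number of square samples of side `spacing_m` needed to tile the area."""
    area_m2 = area_km2 * 1_000_000  # 1 km^2 = 1,000,000 m^2
    return round(area_m2 / (spacing_m ** 2))


dem_samples = samples_for_area(AREA_KM2, DEM_SPACING_M)
naip_pixels = samples_for_area(AREA_KM2, NAIP_M_PER_PIXEL)

print(f"Elevation samples: {dem_samples:,}")
print(f"Imagery pixels:    {naip_pixels:,}")
```

Under these assumptions the terrain works out to about 45 million elevation samples and about 125 million imagery pixels, which is consistent with Leary's description of the imagery as "good enough to get going."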

“The darker it is means the further away from the fire they actually are, and the safer they are and feel. You feel that in the movie. You have these lulls of calm when there isn’t fire, then all of a sudden there’s an eruption of light and fire, and in the classic [director Paul] Greengrass style, everything amps up and everybody gets on the edge of their seat for an hour and a half and can’t breathe.”

—Russ Bowen, Visual Effects Supervisor, beloFX

The Ember Cam was created for the production. “Paul Greengrass said to us that the fire is the villain, so we needed to give it a character and personality,” Leary states. “It had to be a lurking monster. The previs dailies passed through us, and we were trying to figure it out. They wanted the fire to be traveling quickly, so I mapped a smooth path down a hill, swooping around as you would in CG. There’s another Unreal Engine trick where it has VCam, which you can drive through your iPhone. You can manipulate your iPhone like this, and obviously it’s manipulating the camera feeding back to your viewport. I would literally sit myself, in the virtual sense, on this roller-coaster I just set up with the VCam and swing it around and jump up and round, pivot down and bring it around. The germ of what became the Ember Cam is me jumping around like a lunatic controlling a camera that is on a smooth path. I don’t know what the people in flats across the road thought of it!” The Ember Cam shots were toned down. “They took a step back in terms of the Ember Cam feeling because when you do the shot for real, you’re trying to get it finalized,” Bowen remarks. “You get animators involved, and they try to make this perfect camera path and take that chaos out of it. A lot of my feedback to the artist straight away was, ‘You’ve made a nice-looking, polished camera, but it shouldn’t be that. It should actually be more chaotic.’ Going back to our DNEG days, there was a Green Zone shot that was so hard that very few people could actually track it; that was because it was nighttime and Greengrass, so it was chaos and motion blur; basically, cameras chasing these guys running up the hill. I didn’t complete it. It is such a stylized camera operation that you have to get into his mindset. What helps is tracking the plate shots and creating camera profiles from them. You’re studying the rotations and movements and visualizing that as a curve, then showing that to the animators and saying, ‘Look at the mess of this. It’s all over the place and doing all these different things. Apply that methodology to your CG cameras. Take that CG edge off, and you might start getting toward the realm of a Greengrass shot.’”
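The idea Bowen describes, taking the "mess" from a tracked plate camera and applying it to a polished CG camera, can be illustrated with a small sketch. This is not beloFX's pipeline: it assumes a single rotation channel sampled once per frame, uses a simple moving average to separate the operator's jitter from the overall move, and every function name and sample value here is hypothetical.

```python
# Minimal sketch: lift handheld "shake" from a tracked camera curve and
# layer it onto a smooth CG camera curve. All names and values are
# illustrative assumptions, not a studio tool.

def moving_average(values, window):
    """Centered moving average; the window shrinks at the curve's ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def extract_shake(tracked, window=9):
    """Per-frame residual: tracked curve minus its smoothed trend."""
    trend = moving_average(tracked, window)
    return [t - s for t, s in zip(tracked, trend)]


def apply_shake(cg_curve, shake, gain=1.0):
    """Add the shake profile to a CG curve, looping it if the shot is longer."""
    return [v + gain * shake[i % len(shake)] for i, v in enumerate(cg_curve)]


# Hypothetical pan angles (degrees) from a tracked handheld plate.
tracked_pan = [0.0, 0.8, 0.3, 1.9, 1.1, 2.6, 2.0, 3.8, 2.9, 4.1]
# Hypothetical "too perfect" CG pan: a clean linear move.
cg_pan = [0.5 * frame for frame in range(10)]

shake_profile = extract_shake(tracked_pan, window=5)
rough_cg_pan = apply_shake(cg_pan, shake_profile, gain=1.0)
```

Plotting `shake_profile` gives the kind of curve that could be shown to animators as reference, and the `gain` parameter lets the borrowed chaos be dialed up or down per shot.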

Everything started from a place of authenticity and accuracy, but revisions were made for cinematic reasons. “You need to make sure that your shots are well designed and interesting as well as being authentic,” Wade states. “The process we followed on this show was a fairly unique one. It allowed us to balance that almost perfectly by working closely with Charlie over a good period of time and having the resources that we had in terms of the digital version of Paradise and all the fire assets that we had progressing through it in time. You need both of those things to tell a compelling story.” Traffic is a huge player in the narrative. “It is the thing that stops people from getting away,” Leary observes. “We set up a process where we could do considerable lengths of slow-moving traffic jams with hundreds of cars. This is something that Unreal Engine excels at. I spent a lot of time driving vehicles through Paradise. I knew the road network like the back of my hand for a while. The way to do it was to write a simple driving game and tape record your takes one after another so you can build up a load of movement with all of the cars moving around each other reasonably correctly and quickly that way.” The Ember Cam technique came in handy once again. “You would come in from high, so you would see it weave down, set the path up going between the cars almost to the ground, going up over the other side of the car, then up over the bus, and put the VCam on that path and sit there,” Leary explains. “You’re literally controlling it like that, so you’re looking at what is going to tell the story. They need to see the bus, fire and size of the traffic jam. It was almost like a two-pass process.”

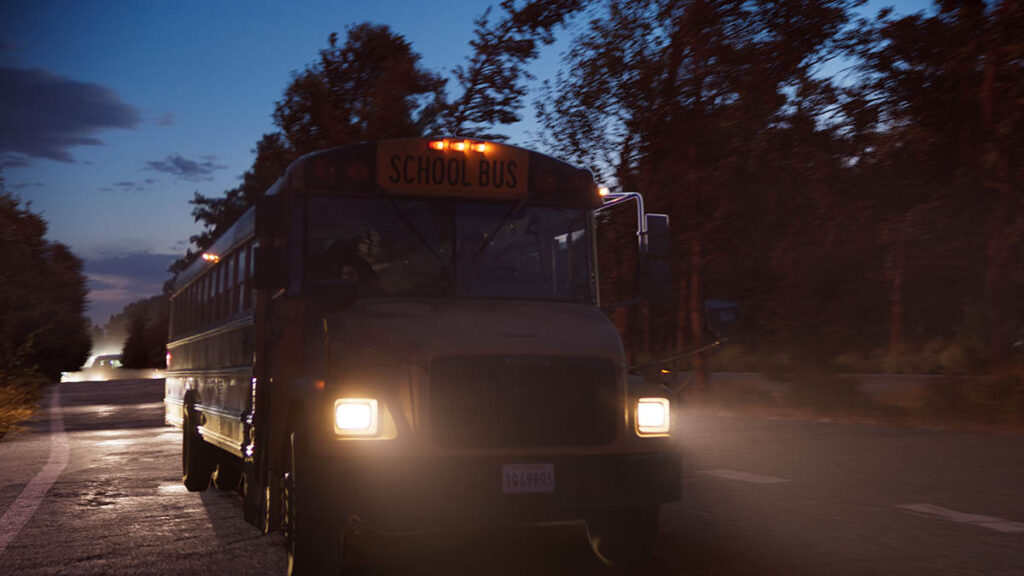

Different lighting situations had to be taken into account. “A lot of our sequences were twilight for night,” Bowen states. “The Crow sequence in particular, which is the crossroads when they’re stuck in the bus and Kevin gets out and has to redirect all the traffic. A lot of that was twilight and some were daylight shots. We had to create this progression of darkness because it starts off a little lighter as they think they’re on sort of the edge of it, and smoke is creeping in on them. Everything’s starting to get claustrophobic and dark, then all of a sudden there’s this eruption of fire as the fire shoots its way towards the bus. Some of that is obviously special effects, with the gaffers and DP on set staging interactive lighting. Interactive lighting on people and the interior of cars is complicated stuff to pull off and never really that convincing in post-production, so you try to capture as much of that as you can in-camera. One of the only positives of a scenario like this is the way that it illuminates the world. It is quite terrifyingly beautiful in that fire itself is beautiful through a lens. It did create these nice shots, and especially the backlighting was beautiful.” There is an ebb and flow between the darkness and light. “The darker it is means the further away from the fire they actually are, and the safer they are and feel,” Bowen remarks. “You feel that in the movie. You have these lulls of calm when there isn’t fire, then all of a sudden there’s an eruption of light and fire, and in the classic Greengrass style, everything amps up and everybody gets on the edge of their seat for an hour and a half and can’t breathe.”

Heavy winds cause a transformer to spark and set the world on fire. “What probably will shock or surprise a lot of people is the sheer number of visual effects shots in the show,” Bowen notes. “You can shoot all of the plates you want, but nothing will match the level of wind that was present at the real location when this fire happened. You’re talking about gusts of wind from 60 to 100 miles an hour, which is insane. It was this perfect storm. We got all of this reference of gale force winds that people were filming in the days leading up to the fire and realized early on that was going to be a big part of the visual effects. You can put all of the wind machines you want into a small location, and can blow around the clothes on people and blow detritus through the shots, and that helps. But what do you do about the mid-ground and background where the trees aren’t moving, or if they are moving, certainly not keeling over, which is essentially what a lot of this reference showed us? While we can focus on fire and smoke, a lot of the effects simulation stuff was actually trees and foliage.”

Memory was an issue because of the vast vegetation. “When you’ve got a single tree blowing around and have to do dynamics of the branches, twigs, leaves and pines, the simulation gets heavy quickly,” Bowen states. “Now do that for a million trees. It’s like crowds but with groom all over it that is blowing in the wind, and also setting it on fire and interacting with smoke. You’re lighting it differently from multiple sources. It was a bit of a nightmare if you think about it! While all of this previsualization was going on and Rick was showing all of this beautiful work, there was a big team in the background trying to work out, ‘This all looks great out of Unreal Engine. How do we do this now, simulate and render it and export it from Unreal Engine, which is good at handling all of that stuff, into Houdini and Solaris?’ The effects team came up with this great way of managing memory. It took a long time to get there, and we came across hurdles in the road. We were experimenting and learning a lot as we went along. Cities and buildings have simplicity to them in that they are hard materials and don’t move that much, unless you’re destroying them!”

Driving the believability is the performance of the cast. “It’s a testament to the actors for being able to visualize that because the plate doesn’t have any of that stuff in there,” Bowen states. “Special effects can pump atmospherics into a scene, but I’ve been on locations where when you do that, that stuff dissipates quickly. Our job was easier because the hardest part is making it believable with the characters, and that step was already done.” There was an overall grade and exposure reduction of everything because there was always a hint of light in the sky. “What was working well was what was happening on the characters,” Bowen remarks. “If you don’t have that [light] and you’re trying to add that interactivity, things start to break. Or, if you have to execute the work, it’s expensive, tedious and takes so long to do that you ultimately don’t do it; or it doesn’t get done to a level that is actually believable. Whenever I’m on location supervising any of that work, that is one of my key takeaways. Things I like to focus on with the lighting gaffer and DP are what’s happening on the characters themselves. Everything else you can do relatively quickly; it’s not too tedious to change the lighting on the bus or ground. We were replacing the entire environment, so what was saved from the plates were only the characters and the bus. Even with that, we were changing the reflections on the bus. The most important thing is what interactive light is happening on the characters. If you nail that, then visual effects can handle everything else.”