By TREVOR HOGG

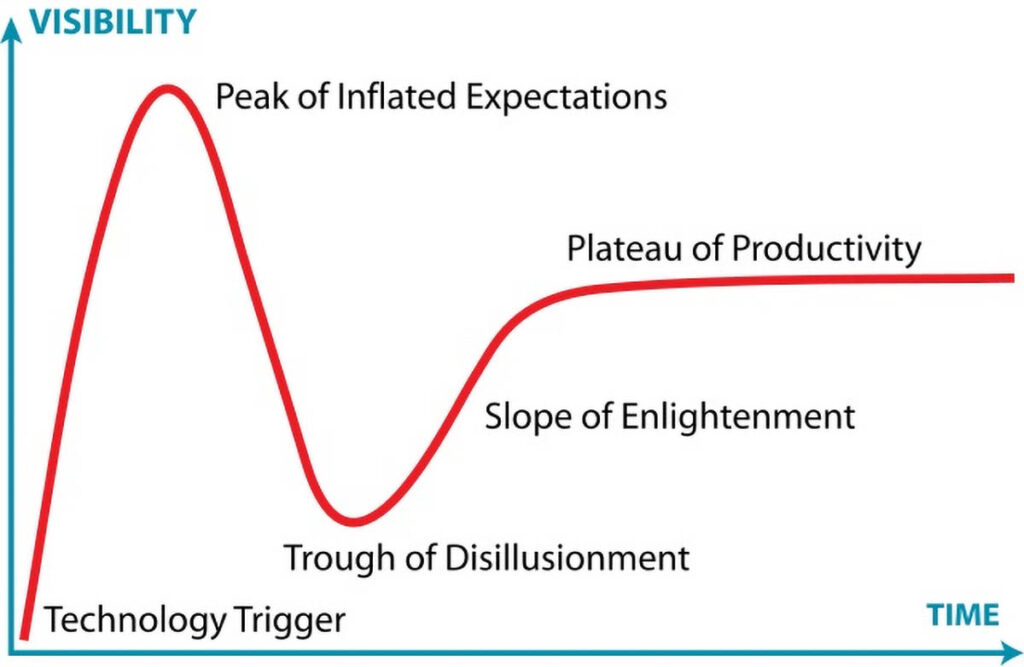

In 1995, a year after being hired by the American research and advisory firm Gartner, analyst Jackie Fenn introduced the ‘Gartner Hype Cycle,’ which tracks the trials and tribulations that innovations go through before being fully embraced and adopted by society. Where does AI fit within this? Judging by the number of startups flooding the visual effects industry with AI solutions, the rapidly advancing technology has entered the stage known as the ‘Peak of Inflated Expectations,’ which makes separating genuine innovation from hype even more difficult.

Among the innovators most likely to remain when the dust settles are automated rotoscoping company Batch; AI production platform Voia; Flawless, which developed DeepEditor, an AI-assisted performance and dialogue editing platform for film, TV and commercials; Intangible.ai, whose AI platform with spatial intelligence enables creatives to build 3D scenes without prompts; and Wonder Dynamics, an Autodesk company responsible for Autodesk Flow Studio, an AI tool that automatically animates, lights and composites CG characters into live-action scenes.

“The whole point is art directing, building your own scene exactly the way you want it, and directing exactly the shot you want with a real actor who can do whatever you want to do. On top of that, you can get something in a matter of minutes to hours just by using a set of AI models that can support everything.”

—Noam Malai, Chief AI Officer and Co-Founder, Voia

After hearing that The Revenant had rotoscoped two characters throughout the movie, and having struggled to separate the skin tones of basketball players from the wooden floors in Hustle, Colorist Seth Ricart partnered with Cinematographer Zak Mulligan to see if AI tools could provide an economical solution, which led to the founding of Batch. “Our current status is roto for people in the shot and depth maps that are stabilized temporally,” explains Ricart, Co-Founder of Batch. “The frames are processed all at once, so it’s not going to jump around in the Z axis. We see these as tools that help filmmakers make films. It’s not replacing filmmakers but helping people do their work better and faster. This is being used on films that would never get roto. The ability to do roto on the whole film for all the people would be cost-prohibitive. It’s for part of the industry that doesn’t exist right now.”

The development process has been gradual. Ricart explains, “We talked to different developers to figure out which direction we should head. As we worked on it, we started with an MVP [minimum viable product]. Once we had that going, we were able to figure out where we had to fine-tune and where the models were failing us. We have been developing the data set based on the failures, and rotoscoping as we go, the old way, to show how it should be done.”

There are those who believe that AI will render the human element obsolete in filmmaking. “You just sit at your laptop, do some prompts and image to video,” notes Noam Malai, Chief AI Officer and Co-Founder of Voia. “That might be true at some point in time, but not soon, mainly because there is still a huge gap between acting and prompting. From day one, we built our own virtual studio that you can use to generate huge amounts of synthetic data sets. You can control everything, build data for any task or model, and create the state of the models for your needs.” Voia Studio is available as an iPhone app and was built around an established filmmaking methodology. Malai explains, “Our approach was to take virtual production but make it AI-enabled so you can easily create environments, preview yourself in that environment and take that into postvis. We even have our models that can take it to final pixels. The whole point is art directing, building your own scene exactly the way you want it, and directing exactly the shot you want with a real actor who can do whatever you want to do. On top of that, you can get something in a matter of minutes to hours just by using a set of AI models that can support everything. You don’t need to do all of those manual processes like state-of-the-art rotoscoping, relighting and color correction. You can blend everything close to final pixels. When you go to post-production with Nuke or Resolve, you don’t need to spend tens or hundreds of thousands of dollars. It’s already there.”

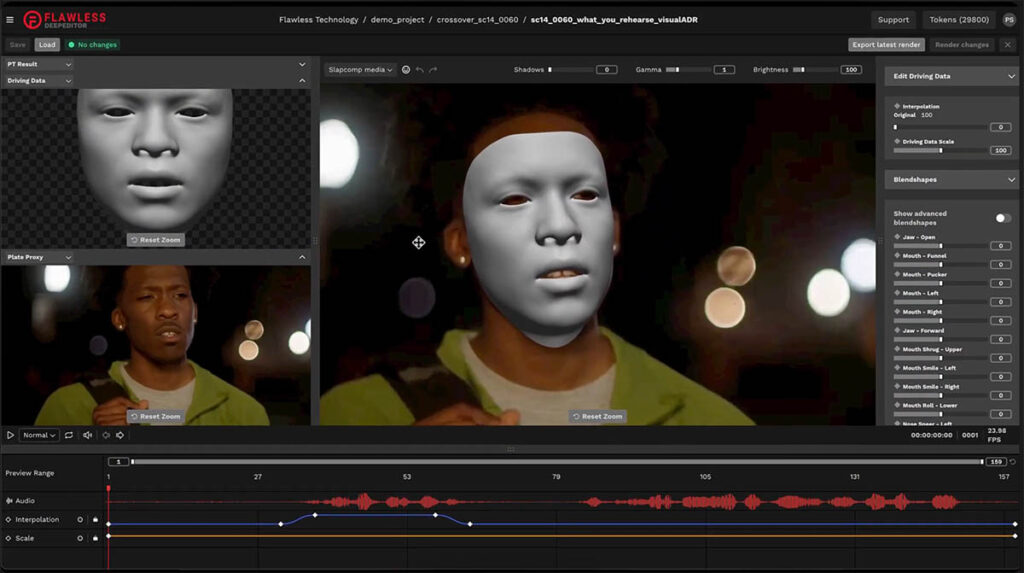

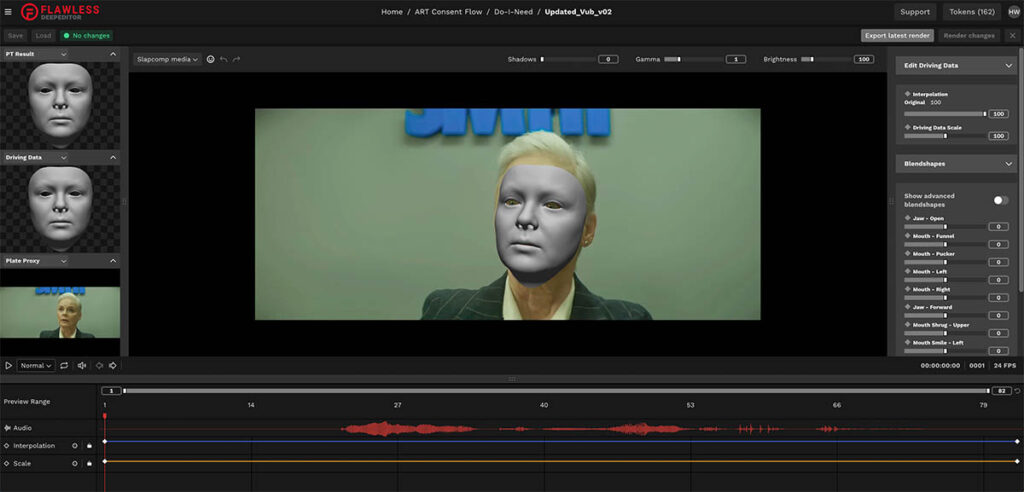

Flawless specializes in syncing facial movements with dialogue. “Flawless is creating tools for filmmakers that allow for the re-articulation of the mouth,” states Eric Wilson, Senior Director of VFX and Image Pipeline at Flawless. “We don’t have anything to do with the audio itself, but we do take in new audio, whether that has been re-recorded by the actor or recorded by a different actor speaking in a different language; we can apply that to the mouth movement so it’s correct for that particular line. What we’re trying to do is to stay as true to the actor’s original performance [as possible]. You temper your cadence so that it matches what has already been said and try to find words that fit within the same period of time. What happens is you’ll put emphasis on words, and when people talk, their eyebrows go up and down, cheeks move and the head bobs, and often those are directly tied to the language that they’re speaking. Suppose you have a different language, like French, where the word order suddenly changes, and you have an actor emphasizing a particular word that now falls in a different place in the sentence. In that case, you have a mismatch in performance dynamics. We’re looking at that and asking, ‘How can we keep our new version true to those acting moments?’”

To accomplish that, there is some digital augmentation in the area of the mouth. Wilson says, “Our software works by first putting 3D geometry onto the face that gets perfectly tracked, matched and fitted to the face, including the beard. Then we have lip animation that happens. It gets driven by the audio. A big part of our technology that is different from [other approaches] is that you can go in and refine the animation of the lips. We can train on as little as one shot, so you can make each shot look exactly correct without having to feed it a ton of other training data. As long as you can see the inside of the mouth, you’re good to go.”

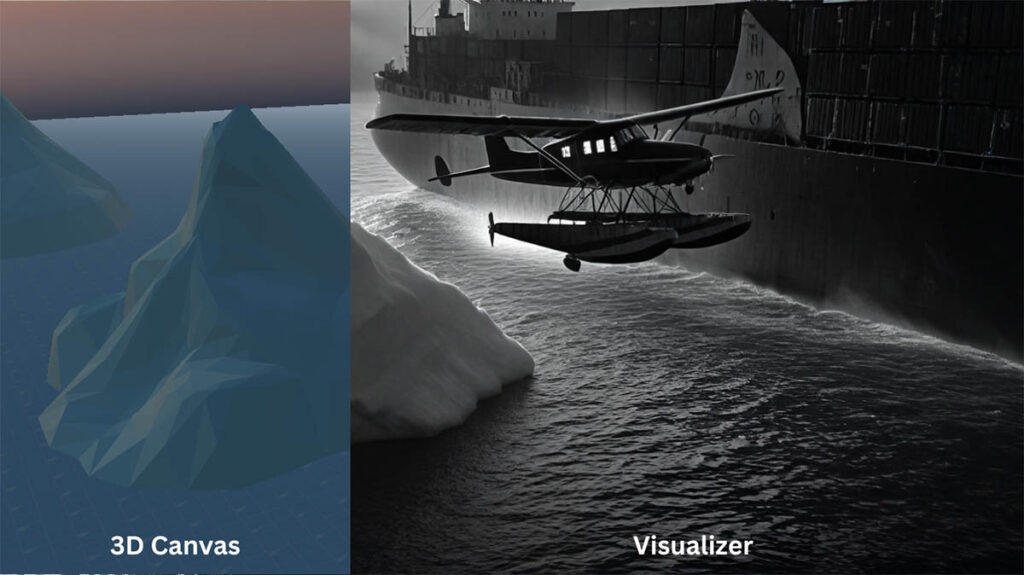

Generative AI is at its best when enabling, rather than taking away from, creative minds with a lifetime of expertise. “I put together a theory of how you could apply generative AI and package it in a simple and fast tool to author and interact in 3D,” explains Charles Migos, Co-Founder & Chief Product Officer at Intangible.ai. “I used that as the basis of creative communication, most notably during the pre-production phases where it matters, because what you decide there affects 100% of what will happen in production and post-production.” In Migos’ view, developing an effective and efficient UI is not hard. He explains, “If you pay careful attention to how creatives operate and look at the process around dailies and how they discuss the creative work at hand, it becomes easy to theorize about how you would create a workflow that will suit the creative discourse itself, while not trying to be Maya, where everything is there because you don’t know what is going to be the next needed thing in the system, even though most users only use 30% of the system capabilities of a tool of that caliber. The rest is net waste utility. We’ve come from a viewpoint that you don’t need to learn how to model, texture, rig, animate, light, or do all the things that are required when using traditional pipeline tools today. Let’s make it one that allows you to keep a high level of representation of the narrative but gives exacting control on how you break that narrative down over time into scenes, shot composition and sequences of shots that are meant to tell the story.”

“We see these as tools that help filmmakers to make films. It’s not replacing filmmakers but helping people to do their work better and faster. This is being used on films that would never get roto. The ability to do roto on the whole film for all the people would be cost-prohibitive. It’s for part of the industry that doesn’t exist right now.”

—Seth Ricart, Co-Founder, Batch

The desire of an actor and a visual effects supervisor to write a script about robotics set in the near future made them contemplate what character creation tools would be needed without access to a blockbuster budget, and they set about developing a software program that Autodesk could not ignore. “Tye Sheridan [Co-Founder of Wonder Dynamics] and I were studying a lot about robotics, which turned into research on how to make tools to cut costs,” recalls Nikola Todorovic, Co-Founder of Wonder Dynamics. “We wanted to have robot characters, so we started looking for ways to accelerate motion capture because we couldn’t afford a big mocap studio and knew that visual effects is tedious work. We looked into self-driving car technology, which is all about computer vision. The basis for many of these digital models is, ‘What am I looking at in my video? Can I understand where the human is, the camera’s movement and what is behind it? Is it a wide shot or a close-up?’ We started building these tools by saying, ‘Let’s break down a shot as much as we can and then we can control it better.’ Then we realized this was bigger and decided to turn it into a platform.” Everything has to be precise, so there is a heavy reliance on synthetic data sets. Todorovic says, “One of the biggest issues with our solution is that it’s mathematically based, and if you only feed it footage, it’s hard to estimate, ‘Did they raise their arm two or three inches?’ That’s crucial information when you’re training these models. In that CG environment, I know exactly the position of my camera, actor and light. That’s how self-driving cars are trained. They build these virtual worlds because of the math needed.” For context, the decision to incorporate AI and machine learning was made in 2017, before ChatGPT became a hot topic. “I knew machine learning would be a crucial part of our industry when we started digging deeper into that technology,” Todorovic remarks. “We had a moment where we sat down and asked, ‘What’s the worst thing that could happen?’ Tye and I both said, ‘We’ll learn what the future of filmmaking is going to be before everyone else.’ That’s a good investment.”